Bouchet

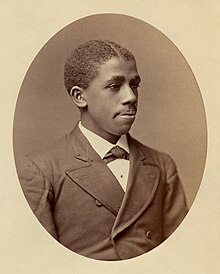

The Bouchet HPC cluster is YCRC's first installation at the Massachusetts Green High Performance Computing Center (MGHPCC). Bouchet contains approximately 10,000 direct-liquid-cooled cores as well as 80 NVIDIA H200 GPUs from the AI Initiative and 48 NVIDIA RTX 5000 Ada GPUs. Bouchet is composed of 64-core nodes, each with 1TB of RAM, for general-purpose compute, and large-memory nodes with 4TB of RAM for memory-intensive workloads. Bouchet also has a dedicated “mpi” partition specifically designed for tightly-coupled parallel workloads. Bouchet is named for Dr. Edward Bouchet (1852-1918), the first self-identified African American to earn a doctorate from an American university, a PhD in physics at Yale University in 1876.

Bouchet is the successor to both Grace and McCleary, with the majority of HPC infrastructure refreshes and growth deployed at MGHPCC going forward. As the YCRC transitions from the Yale West Campus Data Center to the MGHPCC, we will be decommissioning Grace and McCleary in 2026, and all workloads on those systems will be moved to Bouchet. All new YCRC purchases (such as the annual compute refresh or the Provost’s AI Initiative GPUs) will be installed at MGHPCC. In 2026, the older equipment on Grace and McCleary will be retired and most compute resources still under warranty will be added to Bouchet. More information about the decommissioning of Grace and McCleary is available on the Decommission Page. We will be engaging with faculty and users to ensure a smooth transition and minimize disruptions to critical work.

We welcome any researchers to move their workloads to Bouchet at their convenience between now and then to take advantage of Bouchet’s newer, faster and more powerful computing resources. YCRC staff are available to assist; you can contact us at research.computing@yale.edu.

Access the Cluster

Get Started on Bouchet

Please see the Bouchet Getting Started page for more information on the key differences between Bouchet and older YCRC clusters.

Once you have an account, the cluster can be accessed via ssh or Open OnDemand at https://ood-bouchet.ycrc.yale.edu.

System Status and Monitoring

For system status messages and the schedule for upcoming maintenance, please see the system status page. For a current node-level view of job activity, see the cluster monitor page (VPN only).

Installed Applications

A large number of software packages and applications are installed on our clusters. These are made available to researchers via software modules.
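As a minimal sketch of how modules are typically used, the following lists the installed builds of one package from the table and loads its default version. It assumes the cluster environment provides the standard `module` command; the exact module names and toolchain suffixes are not shown on this page, so the example loads by package name only.

```shell
# Package name taken from the table; swap in any other listed package.
pkg="R"

# The `module` command is assumed to be provided by the cluster environment.
# It is usually absent on a laptop, so the example prints a message there.
if command -v module >/dev/null 2>&1; then
    module avail "$pkg"   # show the installed versions of the package
    module load "$pkg"    # load the site default version
    module list           # confirm what is now loaded
else
    echo "module command not found; run this on a cluster node"
fi
```

Loading by bare package name picks whatever the site marks as default; use the full name printed by `module avail` when a specific version matters.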

Available Software Modules

| Package | Versions |

|---|---|

| APR | 1.7.5 |

| APR-util | 1.6.3 |

| ATK | 2.38.0 |

| Armadillo | 11.4.3 |

| Autoconf | 2.71,2.72 |

| Automake | 1.16.5,1.16.5 |

| Autotools | 20220317,20231222 |

| AxiSEM3D | 2024Oct16 |

| BLIS | 0.9.0 |

| BeautifulSoup | 4.11.1 |

| Bison | 3.8.2,3.8.2,3.8.2 |

| Boost | 1.81.0,1.81.0 |

| Brotli | 1.0.9 |

| Brunsli | 0.1 |

| CESM | 2.1.3,2.1.3 |

| CESM-deps | 2,2 |

| CFITSIO | 4.2.0 |

| CMake | 3.24.3 |

| CP2K | 2023.1 |

| CUDA | 12.1.1 |

| Check | 0.15.2 |

| DB | 18.1.40 |

| DBus | 1.15.2 |

| Doxygen | 1.9.5 |

| ELPA | 2022.05.001 |

| ESMF | 8.3.0,8.3.0 |

| EasyBuild | 4.9.3,4.9.4 |

| Eigen | 3.4.0 |

| FFTW | 2.1.5,2.1.5,3.3.10,3.3.10 |

| FFTW.MPI | 3.3.10 |

| FFmpeg | 5.1.2 |

| FHI-aims | 231212_1 |

| FLAC | 1.4.2 |

| FlexiBLAS | 3.2.1 |

| FriBidi | 1.0.12 |

| GCC | 12.2.0 |

| GCCcore | 12.2.0,13.3.0 |

| GDAL | 3.6.2 |

| GDRCopy | 2.3.1 |

| GEOS | 3.11.1 |

| GLPK | 5.0 |

| GLib | 2.75.0 |

| GMP | 6.2.1 |

| GObject-Introspection | 1.74.0 |

| GROMACS | 2023.3 |

| GSL | 2.7 |

| GTK3 | 3.24.35 |

| Gdk-Pixbuf | 2.42.10 |

| Ghostscript | 10.0.0 |

| HDF | 4.2.15 |

| HDF5 | 1.14.0,1.14.0,1.14.0 |

| HPCG | 3.1 |

| HPL | 2.3 |

| HarfBuzz | 5.3.1 |

| Highway | 1.0.3 |

| ICU | 72.1 |

| IOR | 4.0.0,4.0.0 |

| IPython | 8.14.0 |

| ImageMagick | 7.1.0 |

| Imath | 3.1.6 |

| JasPer | 4.0.0 |

| Java | 11.0.16 |

| Julia | 1.10.4 |

| JupyterLab | 4.0.3 |

| JupyterNotebook | 7.0.3 |

| LAME | 3.100 |

| LAMMPS | 2Aug2023 |

| LERC | 4.0.0 |

| LLVM | 15.0.5 |

| LibTIFF | 4.4.0 |

| Libint | 2.7.2 |

| LittleCMS | 2.14 |

| M4 | 1.4.19,1.4.19,1.4.19 |

| MATLAB | 2023b |

| MDI | 1.4.16 |

| METIS | 5.1.0 |

| MPFR | 4.2.0 |

| Mako | 1.2.4 |

| Mesa | 22.2.4 |

| Meson | 0.64.0 |

| NASM | 2.15.05 |

| NLopt | 2.7.1 |

| Ninja | 1.11.1 |

| OSU-Micro-Benchmarks | 6.2 |

| OpenBLAS | 0.3.21 |

| OpenEXR | 3.1.5 |

| OpenJPEG | 2.5.0 |

| OpenMPI | 4.1.4,4.1.4 |

| OpenPGM | 5.2.122 |

| OpenSSL | 1.1 |

| PBZIP2 | 1.1.13 |

| PCRE | 8.45 |

| PCRE2 | 10.40 |

| PLUMED | 2.9.2 |

| PROJ | 9.1.1 |

| Pango | 1.50.12 |

| Perl | 5.36.0,5.38.2 |

| PnetCDF | 1.13.0,1.13.0 |

| PostgreSQL | 15.2 |

| PyYAML | 6.0 |

| Python | 3.10.8,3.10.8 |

| Qhull | 2020.2 |

| QuantumESPRESSO | 7.2 |

| R | 4.4.1 |

| R-bundle-CRAN | 2024.06 |

| Rust | 1.65.0 |

| SCons | 4.5.2 |

| SDL2 | 2.26.3 |

| SQLite | 3.39.4 |

| SWIG | 4.1.1 |

| ScaFaCoS | 1.0.4 |

| ScaLAPACK | 2.2.0 |

| SciPy-bundle | 2023.02 |

| Serf | 1.3.9 |

| Subversion | 1.14.3 |

| Szip | 2.1.1 |

| Tcl | 8.6.12 |

| Tk | 8.6.12 |

| TotalView | 2023.3.10 |

| UCC | 1.1.0 |

| UCX | 1.13.1,1.16.0 |

| UCX-CUDA | 1.13.1 |

| UDUNITS | 2.2.28 |

| UnZip | 6.0 |

| VASP | 6.4.2 |

| VTK | 9.2.6 |

| Voro++ | 0.4.6 |

| Wannier90 | 3.1.0 |

| X11 | 20221110 |

| XML-LibXML | 2.0208 |

| XZ | 5.2.7 |

| Xerces-C++ | 3.2.4 |

| Xvfb | 21.1.6 |

| Yasm | 1.3.0 |

| ZeroMQ | 4.3.4 |

| archspec | 0.2.0 |

| arpack-ng | 3.8.0 |

| at-spi2-atk | 2.38.0 |

| at-spi2-core | 2.46.0 |

| awscli | 2.17.51 |

| binutils | 2.39,2.39,2.42,2.42 |

| bzip2 | 1.0.8 |

| cURL | 7.86.0 |

| cairo | 1.17.4 |

| elbencho | 2.0,3.0 |

| expat | 2.4.9 |

| ffnvcodec | 11.1.5.2 |

| fio | 3.34 |

| flex | 2.6.4,2.6.4,2.6.4 |

| fontconfig | 2.14.1 |

| foss | 2022b |

| freetype | 2.12.1 |

| gettext | 0.21.1,0.21.1 |

| gfbf | 2022b |

| giflib | 5.2.1 |

| git | 2.38.1 |

| gompi | 2022b |

| googletest | 1.12.1 |

| gperf | 3.1 |

| groff | 1.22.4 |

| gzip | 1.12 |

| h5py | 3.8.0 |

| help2man | 1.49.2,1.49.3 |

| hwloc | 2.8.0 |

| hypothesis | 6.68.2 |

| iimpi | 2022b,2024a |

| imkl | 2022.2.1,2024.2.0 |

| imkl-FFTW | 2022.2.1,2024.2.0 |

| impi | 2021.7.1,2021.13.0 |

| intel | 2022b,2024a |

| intel-compilers | 2022.2.1,2024.2.0 |

| intltool | 0.51.0 |

| iomkl | 2022b |

| iompi | 2022b |

| jbigkit | 2.1 |

| json-c | 0.16 |

| jupyter-server | 2.7.0 |

| kim-api | 2.3.0 |

| lftp | 4.9.2 |

| libGLU | |

| libaio | |

| libarchive | |

| libdeflate | |

| libdrm | |

| libepoxy | |

| libfabric | |

| libffi | |

| libgeotiff | |

| libgit2 | |

| libglvnd | |

| libiconv | |

| libjpeg-turbo | |

| libogg | |

| libopus | |

| libpciaccess | |

| libpng | |

| libreadline | |

| libsndfile | |

| libsodium | |

| libtirpc | |

| libtool | |

| libunwind | |

| libvorbis | |

| libvori | |

| libxc | |

| libxml2 | |

| libxslt | |

| libxsmm | |

| libyaml | |

| lxml | 4.9.2 |

| lz4 | 1.9.4 |

| make | 4.3 |

| maturin | 1.1.0 |

| miniconda | 24.7.1 |

| mpi4py | 3.1.4 |

| ncurses | 6.3,6.3 |

| netCDF | 4.9.0,4.9.0,4.9.0 |

| netCDF-C++4 | 4.3.1,4.3.1 |

| netCDF-Fortran | 4.6.0,4.6.0 |

| nettle | 3.8.1 |

| networkx | 3.0 |

| nlohmann_json | 3.11.2 |

| nodejs | 18.12.1,20.11.1 |

| numactl | 2.0.16,2.0.18 |

| patchelf | 0.17.2 |

| pigz | 2.7 |

| pixman | 0.42.2 |

| pkg-config | 0.29.2 |

| pkgconf | 1.8.0,1.9.3,2.2.0 |

| pkgconfig | 1.5.5 |

| pybind11 | 2.10.3 |

| ruamel.yaml | 0.17.21 |

| scikit-build | 0.17.2 |

| tbb | 2021.10.0 |

| utf8proc | 2.8.0 |

| util-linux | 2.38.1 |

| x264 | 20230226 |

| x265 | 3.5 |

| xorg-macros | 1.19.3 |

| xxd | 9.0.1696 |

| zlib | 1.2.12,1.2.12,1.3.1,1.3.1 |

| zstd | 1.5.2 |

Partitions and Hardware

Public Partitions

See each tab below for more information about the available common use partitions.

Use the day partition for most batch jobs. This is the default if you don't specify one with --partition.

Request Defaults

Unless specified otherwise, your jobs will run with the following salloc and sbatch options for this partition.

--time=01:00:00 --nodes=1 --ntasks=1 --cpus-per-task=1 --mem-per-cpu=5120
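A hedged example of overriding these defaults in a batch script for the day partition: it requests 12 hours (under the 1-day maximum) and 4 CPUs while keeping the default 5120 MB per CPU, then prints the resulting total memory. The `#SBATCH` lines are read by `sbatch` and ignored as comments by plain bash, so the script also runs outside a job. The file name `day_job.sh` used below is illustrative.

```shell
#!/bin/bash
#SBATCH --partition=day
#SBATCH --time=12:00:00
#SBATCH --cpus-per-task=4
#SBATCH --mem-per-cpu=5120

# Slurm sets SLURM_CPUS_PER_TASK inside a job when --cpus-per-task is given;
# fall back to the requested value when the script is run outside Slurm.
cpus="${SLURM_CPUS_PER_TASK:-4}"
mem_per_cpu_mib=5120

# Total memory is CPUs multiplied by memory per CPU.
total_mib=$(( cpus * mem_per_cpu_mib ))
echo "CPUs: ${cpus}, total memory: ${total_mib} MiB ($(( total_mib / 1024 )) GiB)"
```

Submitted with `sbatch day_job.sh`, this job would be allocated 4 x 5120 MB = 20 GiB on one node.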

Job Limits

Jobs submitted to the day partition are subject to the following limits:

| Limit | Value |

|---|---|

| Maximum job time limit | 1-00:00:00 |

| Maximum CPUs per group | 2000 |

| Maximum memory per group | 30000G |

| Maximum CPUs per user | 1200 |

| Maximum memory per user | 18000G |

Available Compute Nodes

Requests for --cpus-per-task and --mem can't exceed what is available on a single compute node.

| Count | CPU Type | CPUs/Node | Memory/Node (GiB) | Node Features |

|---|---|---|---|---|

| 83 | cpugen:emeraldrapids | 64 | 990 | cpugen:emeraldrapids, cpumodel:8562Y+, common:yes |

Use the devel partition for jobs with which you need ongoing interaction. For example, exploratory analyses or debugging compilation.

Request Defaults

Unless specified otherwise, your jobs will run with the following salloc and sbatch options for this partition.

--time=01:00:00 --nodes=1 --ntasks=1 --cpus-per-task=1 --mem-per-cpu=5120
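For interactive work, a request along the following lines could be used. This is a sketch: the time and CPU values are illustrative choices that stay within the devel limits listed on this page (6 hours, 16 CPUs per user), and the command is only executed when `salloc` is actually present.

```shell
partition=devel
walltime=02:00:00   # illustrative; the partition maximum is 06:00:00
cpus=4              # illustrative; the per-user maximum is 16 CPUs

cmd="salloc --partition=${partition} --time=${walltime} --cpus-per-task=${cpus}"
echo "$cmd"

# Only attempt the allocation where Slurm is installed (i.e. on the cluster).
if command -v salloc >/dev/null 2>&1; then
    $cmd
fi
```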

Job Limits

Jobs submitted to the devel partition are subject to the following limits:

| Limit | Value |

|---|---|

| Maximum job time limit | 06:00:00 |

| Maximum CPUs per user | 16 |

| Maximum submitted jobs per user | 2 |

Available Compute Nodes

Requests for --cpus-per-task and --mem can't exceed what is available on a single compute node.

| Count | CPU Type | CPUs/Node | Memory/Node (GiB) | Node Features |

|---|---|---|---|---|

| 4 | cpugen:emeraldrapids | 64 | 990 | cpugen:emeraldrapids, cpumodel:8562Y+, common:yes |

Use the week partition for jobs that need a longer runtime than day allows.

Request Defaults

Unless specified otherwise, your jobs will run with the following salloc and sbatch options for this partition.

--time=01:00:00 --nodes=1 --ntasks=1 --cpus-per-task=1 --mem-per-cpu=5120

Job Limits

Jobs submitted to the week partition are subject to the following limits:

| Limit | Value |

|---|---|

| Maximum job time limit | 7-00:00:00 |

| Maximum CPUs per group | 256 |

| Maximum memory per group | 3840G |

| Maximum CPUs per user | 128 |

| Maximum memory per user | 1920G |

Available Compute Nodes

Requests for --cpus-per-task and --mem can't exceed what is available on a single compute node.

| Count | CPU Type | CPUs/Node | Memory/Node (GiB) | Node Features |

|---|---|---|---|---|

| 4 | cpugen:emeraldrapids | 64 | 990 | cpugen:emeraldrapids, cpumodel:8562Y+, common:yes |

Use the gpu partition for jobs that make use of GPUs. You must request GPUs explicitly with the --gpus option in order to use them. For example, --gpus=rtx_5000_ada:2 would request 2 NVIDIA RTX 5000 Ada GPUs for the job.

Request Defaults

Unless specified otherwise, your jobs will run with the following salloc and sbatch options for this partition.

--time=01:00:00 --nodes=1 --ntasks=1 --cpus-per-task=1 --mem-per-cpu=5120

GPU jobs need GPUs!

Jobs submitted to this partition do not request a GPU by default. You must request one with the --gpus option.
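A minimal batch script sketch for the gpu partition, using the GPU type name from the example above. The time and CPU counts are illustrative. Note that in Slurm `--gpus` counts GPUs for the whole job; with the default `--nodes=1` that is the same as per node.

```shell
#!/bin/bash
#SBATCH --partition=gpu
#SBATCH --time=04:00:00
#SBATCH --cpus-per-task=4
#SBATCH --gpus=rtx_5000_ada:2

# Slurm normally exports CUDA_VISIBLE_DEVICES for the GPUs it allocated;
# outside a GPU job the variable is unset, so report that instead.
gpus_seen="${CUDA_VISIBLE_DEVICES:-none}"
echo "Visible GPUs: ${gpus_seen}"

# nvidia-smi exists only on nodes with the NVIDIA driver installed.
if command -v nvidia-smi >/dev/null 2>&1; then
    nvidia-smi --query-gpu=name,memory.total --format=csv
fi
```

Checking the visible devices at the top of a job is a cheap way to catch a forgotten `--gpus` request before the real workload starts.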

Job Limits

Jobs submitted to the gpu partition are subject to the following limits:

| Limit | Value |

|---|---|

| Maximum job time limit | 2-00:00:00 |

| Maximum GPUs per group | 32 |

| Maximum GPUs per user | 32 |

| Maximum running jobs per user | 32 |

Available Compute Nodes

Requests for --cpus-per-task and --mem can't exceed what is available on a single compute node.

| Count | CPU Type | CPUs/Node | Memory/Node (GiB) | GPU Type | GPUs/Node | vRAM/GPU (GB) | Node Features |

|---|---|---|---|---|---|---|---|

| 8 | cpugen:emeraldrapids | 48 | 479 | rtx_5000_ada | 4 | 32 | cpugen:emeraldrapids, cpumodel:6542Y, common:yes, gpu:rtx_5000_ada |

Use the gpu_h200 partition for jobs that make use of H200 GPUs. You must request GPUs explicitly with the --gpus option in order to use them. For example, --gpus=h200:2 would request 2 NVIDIA H200 GPUs for the job.

Request Defaults

Unless specified otherwise, your jobs will run with the following salloc and sbatch options for this partition.

--time=01:00:00 --nodes=1 --ntasks=1 --cpus-per-task=1 --mem-per-cpu=5120

GPU jobs need GPUs!

Jobs submitted to this partition do not request a GPU by default. You must request one with the --gpus option.

Job Limits

Jobs submitted to the gpu_h200 partition are subject to the following limits:

| Limit | Value |

|---|---|

| Maximum job time limit | 2-00:00:00 |

| Maximum GPUs per group | 32 |

| Maximum GPUs per user | 16 |

| Maximum running jobs per user | 10 |

Available Compute Nodes

Requests for --cpus-per-task and --mem can't exceed what is available on a single compute node.

| Count | CPU Type | CPUs/Node | Memory/Node (GiB) | GPU Type | GPUs/Node | vRAM/GPU (GB) | Node Features |

|---|---|---|---|---|---|---|---|

| 9 | cpugen:emeraldrapids | 48 | 1995 | h200 | 8 | 141 | cpugen:emeraldrapids, cpumodel:6542Y, gpu:h200, common:yes |

Use the gpu_devel partition to debug jobs that make use of GPUs, or to develop GPU-enabled code.

Request Defaults

Unless specified otherwise, your jobs will run with the following salloc and sbatch options for this partition.

--time=01:00:00 --nodes=1 --ntasks=1 --cpus-per-task=1 --mem-per-cpu=5120

GPU jobs need GPUs!

Jobs submitted to this partition do not request a GPU by default. You must request one with the --gpus option.

Job Limits

Jobs submitted to the gpu_devel partition are subject to the following limits:

| Limit | Value |

|---|---|

| Maximum job time limit | 06:00:00 |

| Maximum CPUs per user | 12 |

| Maximum GPUs per user | 1 |

| Maximum memory per user | 120G |

| Maximum running jobs per user | 1 |

| Maximum submitted jobs per user | 1 |

Available Compute Nodes

Requests for --cpus-per-task and --mem can't exceed what is available on a single compute node.

| Count | CPU Type | CPUs/Node | Memory/Node (GiB) | GPU Type | GPUs/Node | vRAM/GPU (GB) | Node Features |

|---|---|---|---|---|---|---|---|

| 1 | cpugen:emeraldrapids | 48 | 1995 | h200 | 8 | 141 | cpugen:emeraldrapids, cpumodel:6542Y, gpu:h200, common:yes |

| 4 | cpugen:emeraldrapids | 48 | 479 | rtx_5000_ada | 4 | 32 | cpugen:emeraldrapids, cpumodel:6542Y, common:yes, gpu:rtx_5000_ada |

Use the bigmem partition for jobs that have memory requirements other partitions can't handle.
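To illustrate when bigmem is the right choice, here is a small helper sketch that maps a single-node memory need onto a partition using the node sizes listed on this page (990 GiB on day nodes, 4014 GiB on bigmem nodes). The function name `pick_partition` is hypothetical, and the check looks at memory only, not at time limits or per-user quotas.

```shell
# Hypothetical helper: choose a partition from a per-node memory need in GiB.
pick_partition() {
    mem_gib=$1
    if [ "$mem_gib" -le 990 ]; then
        echo day       # fits on a general purpose node
    elif [ "$mem_gib" -le 4014 ]; then
        echo bigmem    # needs a large memory node
    else
        echo none      # exceeds any single node listed on this page
    fi
}

pick_partition 512
pick_partition 1500
pick_partition 5000
```

For example, a job needing 1500 GiB on one node would be submitted with `--partition=bigmem --mem=1500G`.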

Request Defaults

Unless specified otherwise, your jobs will run with the following salloc and sbatch options for this partition.

--time=01:00:00 --nodes=1 --ntasks=1 --cpus-per-task=1 --mem-per-cpu=5120

Job Limits

Jobs submitted to the bigmem partition are subject to the following limits:

| Limit | Value |

|---|---|

| Maximum job time limit | 1-00:00:00 |

| Maximum CPUs per user | 128 |

| Maximum memory per user | 8000G |

Available Compute Nodes

Requests for --cpus-per-task and --mem can't exceed what is available on a single compute node.

| Count | CPU Type | CPUs/Node | Memory/Node (GiB) | Node Features |

|---|---|---|---|---|

| 4 | cpugen:emeraldrapids | 64 | 4014 | cpugen:emeraldrapids, cpumodel:8562Y+, common:yes |

Use the mpi partition for tightly-coupled parallel programs that make efficient use of multiple nodes. See our MPI documentation if your workload fits this description.

Request Defaults

Unless specified otherwise, your jobs will run with the following salloc and sbatch options for this partition.

--time=01:00:00 --nodes=1 --ntasks=1 --cpus-per-task=1 --exclusive --mem=498688
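Because these defaults include `--exclusive` and `--mem=498688` (498688 MB is 487 GiB, the full memory of an mpi node), each job receives whole nodes. A hedged sketch of a two-node job follows; the node count and time are illustrative, and `./my_mpi_program` is a hypothetical executable that you would build against one of the installed MPI modules.

```shell
#!/bin/bash
#SBATCH --partition=mpi
#SBATCH --time=12:00:00
#SBATCH --nodes=2
#SBATCH --ntasks-per-node=64

# Inside a job Slurm sets SLURM_JOB_NUM_NODES; fall back to the requested
# value when the script is run outside Slurm.
nodes="${SLURM_JOB_NUM_NODES:-2}"
cores_per_node=64
ranks=$(( nodes * cores_per_node ))
echo "Launching ${ranks} MPI ranks across ${nodes} nodes"

# ./my_mpi_program is a placeholder; launch it only if Slurm and the
# executable are both present.
if command -v srun >/dev/null 2>&1 && [ -x ./my_mpi_program ]; then
    srun ./my_mpi_program
fi
```

Requesting one task per core (64 per node) is a common starting point for pure MPI codes; hybrid MPI/OpenMP codes would lower `--ntasks-per-node` and raise `--cpus-per-task` instead.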

Job Limits

Jobs submitted to the mpi partition are subject to the following limits:

| Limit | Value |

|---|---|

| Maximum job time limit | 2-00:00:00 |

| Maximum nodes per group | 32 |

| Maximum nodes per user | 32 |

Available Compute Nodes

Requests for --cpus-per-task and --mem can't exceed what is available on a single compute node.

| Count | CPU Type | CPUs/Node | Memory/Node (GiB) | Node Features |

|---|---|---|---|---|

| 60 | cpugen:emeraldrapids | 64 | 487 | cpugen:emeraldrapids, cpumodel:8562Y+, common:yes |

Use the scavenge partition to run preemptable jobs on more resources than normally allowed. For more information about scavenge, see the Scavenge documentation.
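Since scavenge jobs can be preempted, it helps if a job can pick up where it left off. The sketch below assumes preempted jobs are requeued when submitted with `--requeue` (check the Scavenge documentation for the actual policy) and uses a hypothetical checkpoint file, `scavenge_demo.ckpt`, to skip steps that already finished. The requested time is illustrative.

```shell
#!/bin/bash
#SBATCH --partition=scavenge
#SBATCH --time=06:00:00
#SBATCH --requeue

checkpoint="scavenge_demo.ckpt"   # hypothetical checkpoint file name
total_steps=3

# Resume after the last completed step if a checkpoint exists.
last_done=0
if [ -f "$checkpoint" ]; then
    last_done=$(cat "$checkpoint")
fi
echo "Restart count: ${SLURM_RESTART_COUNT:-0}, resuming after step ${last_done}"

step=$(( last_done + 1 ))
while [ "$step" -le "$total_steps" ]; do
    echo "working on step ${step}"    # replace with the real work
    echo "$step" > "$checkpoint"      # record progress after each step
    step=$(( step + 1 ))
done
```

Writing the checkpoint after every step means a preemption costs at most one step of repeated work.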

Request Defaults

Unless specified otherwise, your jobs will run with the following salloc and sbatch options for this partition.

--time=01:00:00 --nodes=1 --ntasks=1 --cpus-per-task=1 --mem-per-cpu=5120

GPU jobs need GPUs!

Jobs submitted to this partition do not request a GPU by default. You must request one with the --gpus option.

Available Compute Nodes

Requests for --cpus-per-task and --mem can't exceed what is available on a single compute node.

| Count | CPU Type | CPUs/Node | Memory/Node (GiB) | GPU Type | GPUs/Node | vRAM/GPU (GB) | Node Features |

|---|---|---|---|---|---|---|---|

| 10 | cpugen:emeraldrapids | 48 | 1995 | h200 | 8 | 141 | cpugen:emeraldrapids, cpumodel:6542Y, gpu:h200, common:yes |

| 10 | cpugen:emeraldrapids | 32 | 488 | l40s | 4 | 48 | cpugen:emeraldrapids, cpumodel:6526Y, gpu:l40s, common:no |

| 10 | cpugen:emeraldrapids | 48 | 479 | rtx_5000_ada | 4 | 32 | cpugen:emeraldrapids, cpumodel:6542Y, common:yes, gpu:rtx_5000_ada |

| 4 | cpugen:emeraldrapids | 64 | 4014 | | | | cpugen:emeraldrapids, cpumodel:8562Y+, common:yes |

| 60 | cpugen:emeraldrapids | 64 | 487 | | | | cpugen:emeraldrapids, cpumodel:8562Y+, common:yes |

| 94 | cpugen:emeraldrapids | 64 | 990 | | | | cpugen:emeraldrapids, cpumodel:8562Y+, common:yes |

Use the scavenge_gpu partition to run preemptable jobs on more GPU resources than normally allowed. For more information about scavenge, see the Scavenge documentation.

Request Defaults

Unless specified otherwise, your jobs will run with the following salloc and sbatch options for this partition.

--time=01:00:00 --nodes=1 --ntasks=1 --cpus-per-task=1 --mem-per-cpu=5120

GPU jobs need GPUs!

Jobs submitted to this partition do not request a GPU by default. You must request one with the --gpus option.

Available Compute Nodes

Requests for --cpus-per-task and --mem can't exceed what is available on a single compute node.

| Count | CPU Type | CPUs/Node | Memory/Node (GiB) | GPU Type | GPUs/Node | vRAM/GPU (GB) | Node Features |

|---|---|---|---|---|---|---|---|

| 10 | cpugen:emeraldrapids | 48 | 1995 | h200 | 8 | 141 | cpugen:emeraldrapids, cpumodel:6542Y, gpu:h200, common:yes |

| 8 | cpugen:emeraldrapids | 48 | 479 | rtx_5000_ada | 4 | 32 | cpugen:emeraldrapids, cpumodel:6542Y, common:yes, gpu:rtx_5000_ada |

| 10 | cpugen:emeraldrapids | 32 | 488 | l40s | 4 | 48 | cpugen:emeraldrapids, cpumodel:6526Y, gpu:l40s, common:no |

Private Partitions

With few exceptions, jobs submitted to private partitions are not considered when calculating your group's Fairshare. Your group can purchase additional hardware for private use, which we will make available as a pi_groupname partition. These nodes are purchased by you, but supported and administered by us. After vendor support expires, we retire compute nodes. Compute nodes can range from $10K to upwards of $50K depending on your requirements. If you are interested in purchasing nodes for your group, please contact us.

PI Partitions

Request Defaults

Unless specified otherwise, your jobs will run with the following salloc and sbatch options for this partition.

--time=01:00:00 --nodes=1 --ntasks=1 --cpus-per-task=1 --mem-per-cpu=5120

GPU jobs need GPUs!

Jobs submitted to this partition do not request a GPU by default. You must request one with the --gpus option.

Job Limits

Jobs submitted to the pi_co54 partition are subject to the following limits:

| Limit | Value |

|---|---|

| Maximum job time limit | 7-00:00:00 |

Available Compute Nodes

Requests for --cpus-per-task and --mem can't exceed what is available on a single compute node.

| Count | CPU Type | CPUs/Node | Memory/Node (GiB) | GPU Type | GPUs/Node | vRAM/GPU (GB) | Node Features |

|---|---|---|---|---|---|---|---|

| 10 | cpugen:emeraldrapids | 32 | 488 | l40s | 4 | 48 | cpugen:emeraldrapids, cpumodel:6526Y, gpu:l40s, common:no |

Storage

Bouchet has access to one filesystem called Roberts. Roberts is an all-flash, NFS filesystem similar to the Palmer filesystem on Grace and McCleary. For more details on the different storage spaces, see our Cluster Storage documentation.

Your ~/project_pi_<netid of the pi> and ~/scratch_pi_<netid of the pi> directories are shortcuts.

Get a list of the absolute paths to your directories with the mydirectories command.

If you want to share data in your Project or Scratch directory, see the permissions page.

For information on data recovery, see the Backups and Snapshots documentation.

Warning

Files stored in scratch are purged if they are older than 60 days. You will receive an email alert one week before they are deleted. Artificial extension of scratch file expiration is forbidden without explicit approval from the YCRC. Please purchase storage if you need additional longer-term storage.
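To see which scratch files are approaching the purge window so they can be copied to project storage in time, something like the following sketch may help. It assumes file age is judged by modification time, which this page does not specify, and `list_expiring` is a hypothetical helper name. Pass it the absolute scratch path reported by `mydirectories`.

```shell
# Hypothetical helper: list regular files under a directory whose
# modification time is more than the given number of days in the past.
list_expiring() {
    dir=$1
    days=$2
    find "$dir" -type f -mtime +"$days"
}

# Example: files older than 53 days, i.e. within about a week of the
# 60-day purge. Set SCRATCH_DIR to your own scratch path first.
if [ -n "${SCRATCH_DIR:-}" ] && [ -d "$SCRATCH_DIR" ]; then
    list_expiring "$SCRATCH_DIR" 53
fi
```

This only lists files; it does not touch or modify them, so it does not extend their expiration.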

| Partition | Root Directory | Storage | File Count | Backups | Snapshots | Notes |
|---|---|---|---|---|---|---|
| home | /home | 125GiB/user | 500,000 | Yes | >=2 days | |
| project | /nfs/roberts/project | 4TiB/group | 5,000,000 | Yes | >=2 days | |
| scratch | /nfs/roberts/scratch | 10TiB/group | 15,000,000 | No | No | |
| pi | /nfs/roberts/pi | varies | varies | No | >=2 days | |